Programming

The act of creating useful tools that I know will make people's lives easier, and fun games that I know people will enjoy, is pure bliss. Even when I'm just messing around to learn a new library or figure out how a new system works, programming gives a sense of adventure unlike anything else.

Here are some fun projects I've built for work, as coursework, and in my own spare time:

Hobby Projects

Multi-Agent Logistics Simulation

Distributed multi-agent logistics simulation. An arbitrary number of AI agents connect via the network to a server to get a procedurally generated city map and information about what deliveries have been requested. Agents use A* pathfinding to find the optimal routes to pick up items and execute deliveries. The server performs task distribution according to agent capabilities. Agent movement is simulated, and a visualization of the world state is constantly shown to the user.

Agent code is written in Python (each agent is a single instance of a running script), and the server/visualization is written in Unity with C#.
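As a rough illustration of the A* routing step, here is a minimal sketch on a small grid. The grid format (`#` for blocked cells), the function name `a_star`, and the unit step cost are assumptions made for this example; the actual agents plan over the server's procedurally generated city map.

```python
import heapq


def a_star(grid, start, goal):
    """Return a shortest 4-connected path from start to goal, or None.

    grid is a list of equal-length strings; '#' marks a blocked cell
    (a hypothetical format chosen for this sketch).
    """
    def heuristic(cell):
        # Manhattan distance never overestimates on a unit-cost grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was found later
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        row, col = cell
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            if not (0 <= r < len(grid) and 0 <= c < len(grid[0])):
                continue
            if grid[r][c] == "#":
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None
```

Calling `a_star(["..#", "..#", "..."], (0, 0), (2, 2))` returns a list of `(row, column)` cells from start to goal, or `None` when the goal is unreachable.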

Japanese Mushroom Dictionary

Mobile app for iPhone (link) and Android (link). Advertising copy follows:

The Japanese Mushroom Name Dictionary lets you look up Japanese common names (wamei) and gives you the equivalent scientific binomial, or vice-versa. You can look up over 5,000 mushrooms, lichens, and slime molds! Save your favorite mushrooms to your Favorites list and browse through them at any time! All names are based on the latest mycological research, with authors and paper names given. Mushroom pages link to Japanese and international websites where you can view further details and photos! Made with Unity and C#.

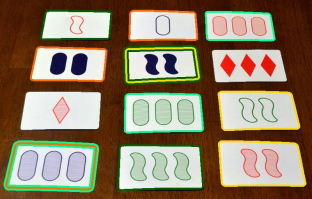

SET Solver

This application uses a custom computer vision algorithm to solve the game of SET. You supply an image of the current state of a SET game, and it will find all of the sets (in a fraction of a second) and label them with colored outlines. It works for live video as well. Written in Python with OpenCV.
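Once the cards have been recognized, finding the sets is a small combinatorial search. The sketch below covers only that last step and assumes each card has already been reduced to four attributes (number, color, shading, shape) encoded as 0, 1, or 2; that encoding and the function names are assumptions for illustration, not the application's actual data model.

```python
from itertools import combinations


def is_set(a, b, c):
    """Three cards form a set if every attribute is all-same or all-different.

    With values encoded as 0, 1, 2, that holds exactly when each
    attribute's three values sum to a multiple of 3.
    """
    return all((x + y + z) % 3 == 0 for x, y, z in zip(a, b, c))


def find_sets(cards):
    """Return every 3-card combination on the table that forms a set."""
    return [trio for trio in combinations(cards, 3) if is_set(*trio)]
```

A standard 12-card layout has only 220 possible trios, so brute force is more than fast enough here.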

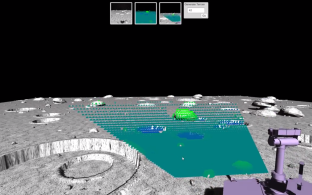

Lunar Rover Sensor Data and Terrain Simulation

This simple simulation allows the user to drive a rover around a procedurally-generated lunar surface, and to collect simulated sensor data from cameras and a depth sensor. Made with Unity and C#.

Dining with Darwin (with Mike Gailey)

This comical game, developed over 72 hours as a submission to the Butterscotch ShenaniJam 2019, allows the player to play as Charles Darwin as he eats a number of new and exotic creatures. (This is based on real history; Charles Darwin was infamous for tasting all of the new species he discovered.) The game features procedurally-generated worlds and Boids-like multi-agent flocking. Made with Unity and C#.

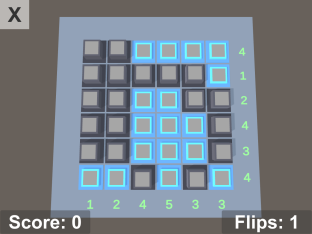

Elite Detection and Correction Squad

This is an unfinished puzzle game which takes place inside an on-board CPU in space. In the game, which is based around the theme of error-correcting codes, players solve grids of puzzles with their knowledge of parity bits and checksums. Made with Unity and C#.

Arduino Simon Says

A re-implementation of "Simon Says" built from scratch and programmed on Arduino, with LEDs and a piezo speaker to provide audiovisual feedback. Also includes a sound engine that I wrote to play music borrowed from Super Mario Bros. Controlled with an SNES controller. Hardware switches enable multiple modes and difficulty settings.

Arduino PID Motor Controller

An implementation of an angular velocity controller for a motor using a photoelectric sensor for feedback. Uses PID control (with gains determined by Ziegler-Nichols) to drive a motor at a specified angular velocity profile. Written in C++.
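The controller itself runs in C++ on the Arduino; the Python sketch below only illustrates the idea. It assumes the classic Ziegler-Nichols closed-loop rule (gains derived from the ultimate gain `ku` and oscillation period `tu`), and the class and function names are hypothetical.

```python
def ziegler_nichols_pid(ku, tu):
    """Classic Ziegler-Nichols PID gains from ultimate gain ku and period tu."""
    kp = 0.6 * ku
    ki = kp / (tu / 2.0)   # Ti = tu / 2
    kd = kp * (tu / 8.0)   # Td = tu / 8
    return kp, ki, kd


class PID:
    """Textbook PID controller with a rectangular-rule integral term."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured, dt):
        """Return the control output for one time step of length dt."""
        error = setpoint - measured
        self.integral += error * dt
        # No derivative on the first sample, since there is no history yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

In a loop, `update` would be called once per sensor reading, with the setpoint taken from the desired angular velocity profile at that time.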

At Heart's a Stereo: HTML5 Data Visualization

This is a data visualization that I wrote from scratch over the course of a few days, with JavaScript using HTML5 Canvas. Data is visualized in the style of a 2D stereo equalizer, where bar height and color can represent two variables. Text is dynamically sized and animations let you see how data changes over time. The custom data format allows users to add their own data easily. The concept for this visualization was created by Chia-Yi Hou.

Blinded by the Beat

Windows Executable (4.5 MB) | Source (4.7 MB)

An experimental puzzle game that I made for SEAHOP, which uses no graphics. Players must rely entirely on audio to solve the puzzle. Once the puzzle is solved, the answer is revealed graphically.

Give it a try--stereo headphones help a lot. Controls are WASD or arrow keys, and space.

This project was written in C++, using the SDL library for multimedia. It requires the .NET framework to run.

T-Rex Vision Motion Detector

Xcode 4 Project (Mac OS X) (37 KB)

This is a simple application that takes a video stream (e.g., from a webcam) as input and outputs any pixels that have changed significantly over the past several frames, resulting in a sort of "T-Rex vision"-style motion detector (as inspired by Jurassic Park).

Image data is stored in a ring buffer, and when the standard deviation for historical intensity values for a pixel exceeds the draw threshold, that pixel is assumed to have changed significantly and is drawn. The ring buffer size and draw threshold are adjustable.
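The ring-buffer test described above can be sketched in a few lines. The original is C++ with OpenCV; this version uses plain Python lists so it stays self-contained, and the class name, default values, and frame format (a 2D list of grayscale intensities) are assumptions for illustration.

```python
from collections import deque
from statistics import pstdev


class MotionDetector:
    """Flag pixels whose recent intensity history varies more than a threshold."""

    def __init__(self, buffer_size=5, draw_threshold=10.0):
        # A deque with maxlen acts as a ring buffer: the oldest frame
        # is dropped automatically once the buffer is full.
        self.frames = deque(maxlen=buffer_size)
        self.draw_threshold = draw_threshold

    def update(self, frame):
        """Add a frame (2D list of intensities) and return a 2D boolean mask."""
        self.frames.append(frame)
        rows, cols = len(frame), len(frame[0])
        return [
            [
                pstdev([f[r][c] for f in self.frames]) > self.draw_threshold
                for c in range(cols)
            ]
            for r in range(rows)
        ]
```

A larger buffer makes changed pixels linger in the output for longer, while a higher threshold suppresses sensor noise at the cost of missing subtle motion.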

This project was written in C++ and requires the OpenCV library to run.

SEAHOP Puzzle Competition Puzzle Webpages

For the SEAHOP puzzle-solving competition, I wrote a dynamic website where teams can log in, and only certain puzzles are visible to them, determined by which puzzles they've already solved in the competition. This makes it so that all teams follow the same general order of puzzles, which allows the puzzle designer (me) to ramp up difficulty in a fun way.

I also built an administrative console for event staff, to make updating the puzzles each team has solved easy and fast.

This project was written in PHP, interacting with a MySQL database. See the SEAHOP page on this site for more information about SEAHOP.

Professional Projects

Various projects (for Astroscale)

Numerous internal projects, including:

- Full 3D simulator to generate synthetic data for mission development and testing (lead developer)

- Web-based interactive simulation showing satellite position and path over time (lead developer). Screenshot here.

- Pose estimation for orbital rendezvous, using novel computer vision and sensor fusion methods (lead developer)

- Multi-user HoloLens AR visualization tools for hardware discussions and public outreach (lead developer)

- Telemetry and telecommand parsing tools for integrated avionics testing (lead developer)

- 2D and 3D data visualization for large (Monte Carlo) simulation datasets (lead developer)

Caspian (for NASA JPL)

The 3D visualization tool for the Perseverance rover's autonomous driving algorithm. Originally used before Perseverance launch to develop the on-board algorithms, Caspian is now used for analyzing the Perseverance rover's autonomous drives on the Martian surface. Made with Unity and C#, along with support scripts in Python. I was the lead developer, and worked closely with the flight software team writing the autonomous driving system.

SlingShot (for NASA JPL)

This was a tool that allowed a presenter and multiple other users to see Europa Clipper flybys of Jupiter in 3D. A connected server and cell phone app allowed the presenter to control the view for all users. It was used to conduct mission review during the Europa Clipper Mission Systems PDR. Made with Unity and C#, along with a server and frontend written in Python and JavaScript, respectively. I was the lead developer, and worked closely with the Europa Clipper Mission Systems team.

OnSight (for NASA JPL)

OnSight is a mixed-reality software tool that allows scientists and engineers to virtually walk on the surface of Mars.

As NASA JPL states here, "OnSight uses imagery from NASA's Curiosity rover to create an immersive 3D terrain model, allowing users to wander the actual dunes and valleys explored by the robot. The goal of the software, a collaboration between Microsoft and JPL's Ops Lab, is to bring scientists closer to the experience of being in the field. Unlike geologists on Earth, who can get up close and personal with the terrain they study, Martian geologists have a harder time visualizing their environment through 2D imagery from Mars."

I built several features for OnSight, including a realistic shadow simulation based on time of day and the interface and backend for navigating to any site along the Curiosity rover's traverse.

VR Orbital Design Tool (for NASA JPL)

This was a tool to allow a JPL mission designer to design an orbital trajectory in an interactive, physical way. The VR software links together with a separate orbital solver (developed by JPL mission designers) in order to find orbital trajectories through the waypoints set in VR. Made with Python, Unity and C#, using HTC Vive. I was the lead developer, and worked closely with the JPL mission designers.

3D Telepresence Interface for Robotic Manipulation (for NASA JPL)

In this project, I led development of an intuitive and innovative 3D interface for robotic calibration and manipulation. This interface permits extremely fast calibration of sensors and manipulator position (on the order of 30 seconds), and allows precise manipulation in dynamic environments with an easily expandable library of objects and interaction methods. The tool was written in C#, and used the Unity game engine to generate the 3D environment.

Lunar Rover Ground Station

"Moon Shot" documentary feature

Over the course of six months, I worked with the Google Lunar XPRIZE team Hakuto in Sendai, Japan, to build a fully-featured ground station for their "Moonraker" lunar rover, in preparation for a future mission to the Moon. The software is equipped with a number of both standard and advanced features, including a robust fault detection and alarm system, 3D environment viewing, and several innovative types of telemetry analysis and visualization. It was used for hundreds of hours of laboratory and field tests.

Various projects (for SpaceX)

At SpaceX, I worked on testing the on-board software for the Dragon space capsule, running tests both in a fully virtual environment and on hardware-in-the-loop test tables. I wrote and optimized numerous tests to ensure mission safety during the Dragon's approach and docking to the ISS.

Various projects (for Nintendo of America)

Some of the projects for which I led development at Nintendo included:

- A suite of text formatting tools to predict in-game text display on handheld hardware (Python, C#)

- 3D graphics and inverse kinematics demo on handheld hardware (C++)

- Puzzle game demo on handheld hardware (C++)

- Utility to check guideline compliance in exported images (Python)

- Various small samples and proofs of concept (various languages)

Academic Projects

Graduate Thesis (Master of Science in Aeronautics & Astronautics, University of Washington)

"Techniques for Fault Detection and Visualization of Telemetry Dependence Relationships for Root Cause Fault Analysis in Complex Systems"

This thesis explores new ways of looking at telemetry data, from a time-correlative perspective, in order to see patterns within the data that may suggest root causes of system faults. It was thought initially that visualizing an animated Pearson Correlation Coefficient (PCC) matrix for telemetry channels would be sufficient to give new understanding; however, testing showed that the high dimensionality of this approach, and the difficulty of seeing change over time, impeded understanding. Different correlative techniques, combined with the time curve visualization proposed by Bach et al. (2015), were adapted to visualize both raw telemetry and telemetry data correlations. Review revealed that these new techniques give insights into the data, and an intuitive grasp of data families, which show the effectiveness of this approach for enhancing system understanding and assisting with root cause analysis for complex aerospace systems.
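For readers unfamiliar with the PCC matrix, here is a minimal sketch of how one frame of it could be computed for a window of telemetry. The channel names, the dictionary layout, and the choice to report 0.0 for a constant channel are all assumptions made for this example, not details taken from the thesis.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sample lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        # Assumption: treat a constant channel as uncorrelated
        # rather than dividing by zero.
        return 0.0
    return cov / (spread_x * spread_y)


def correlation_matrix(channels):
    """Map each pair of channel names to the PCC of their samples."""
    names = list(channels)
    return {a: {b: pearson(channels[a], channels[b]) for b in names} for a in names}
```

Recomputing the matrix over a sliding window of samples gives one frame per window, which is roughly what an animated matrix would display.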

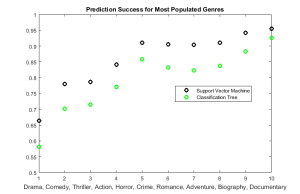

Machine Learning Classifier for Determining Film Trailer Genres (with John Fuini and Noel Kimber)

Research paper | Poster | GitHub repo

This is a pipeline for calculating a feature set, based on video and audio content, from a given movie trailer, and then classifying automatically by genre. We constructed our feature set from a combination of computer vision techniques and volume/frequency analysis. After this, we applied Support Vector Machines as well as Binary Decision Trees as classifiers. Our cross-validation testing showed an accuracy of better than 90% for some of the more common film genres.

Telemetry Display and Fault Diagnosis Tool (with Nick Reiter)

Frontend Explanation

Backend Explanation

GitHub repo | Explanatory poster

This data visualization tool is an experimental testbed for a number of techniques to display detailed telemetry data from an aerospace system, towards the goals of achieving a quick understanding of the current state of the system and troubleshooting any complex faults that may occur during system operation. Various techniques, backed by research and driving factors related to the needs of aerospace fault analysis, are employed within this interface, including a degree-of-interest tree modeling the data channel hierarchy, plots for real-time data display, and a correlation matrix showing relationships between various telemetry channels.

The client side of this application was written in JavaScript, using the D3.js library for various visualization-related components. In addition, data is generated dynamically via a program written in Python, and can be loaded from a number of data sources and distributed to multiple clients at once.

Ball Tracker and Predictor for Juggling

This is a Python application that uses the OpenCV library (version 2) to track the movement of balls during a video. It tracks the balls with a combination of color filtering and k-means clustering. Once the balls' positions are determined, their velocities are estimated by comparing consecutive frames. Then numerical integration (Euler's method) is used to find a predicted trajectory through space (subject to gravity). Ultimately, these trajectories are updated with new data from the image processing side, using a weighted filter to combine the predicted trajectory with the observed position and velocity.
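The prediction and update steps can be sketched as follows. This is a simplified illustration rather than the application's code: it assumes metric units with gravity along the negative y axis, and the function names and the single blending weight are hypothetical.

```python
GRAVITY = 9.81  # m/s^2 along -y; an assumed unit system for this sketch


def predict_trajectory(pos, vel, dt, steps):
    """Predict future (x, y) positions with explicit Euler integration."""
    x, y = pos
    vx, vy = vel
    points = []
    for _ in range(steps):
        # Explicit Euler: advance position with the current velocity,
        # then advance velocity with the (constant) acceleration.
        x += vx * dt
        y += vy * dt
        vy -= GRAVITY * dt
        points.append((x, y))
    return points


def blend(predicted, observed, weight):
    """Weighted combination of a predicted and an observed vector.

    weight=0 trusts the prediction entirely; weight=1 trusts the observation.
    """
    return tuple(weight * o + (1.0 - weight) * p for p, o in zip(predicted, observed))
```

Each new frame would blend the observed position and velocity into the current estimate, then re-run `predict_trajectory` from the blended state.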

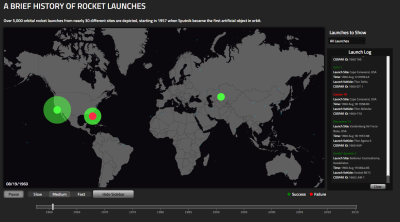

A Brief History of Rocket Launches (with Sonja Khan and Nick Reiter)

This is a data visualization made using JavaScript and the D3.js data visualization library. Data for all of the recorded orbital rocket launches from 1957 to early 2015 is shown in an interactive, animated fashion, and colors allow you to easily differentiate between successful launches and failed ones.

Magnetic Ball Levitation System

This is a system that uses an electromagnet, driven by a controller written in Simulink and running under xPC target, to stably and robustly levitate a metal ball in mid-air. Starting with mostly-assembled hardware, a colleague and I ran various tests to determine system parameters, then designed a full-state feedback controller, using gains calculated with LQR, to apply a dynamically changing electromagnetic force to balance the force of gravity on the ball. This resulted in the stable behavior you see in the video above. Done as part of a university control systems course.

PID Controller for Line-Following Robot

GitHub Repo | Accompanying Presentation

For this project, I built and tuned a PID controller to turn an already-assembled, motorized vehicle into a line-following robot. The robot carries an Arduino Uno-equivalent board, and the code for this project was written in C++. (Some supplementary code for parameter analysis, when determining PID gains, was written in MATLAB.)

Markov Decision Process Solver for Inverted Pendulum Problem

This application models the approximated, discretized dynamics of an inverted pendulum balancing problem as a Markov Decision Process, and then uses Bellman value iteration to calculate an optimal state-feedback control law for the pendulum. This control law is simulated (and animated), as shown to the left.
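Value iteration itself is compact. The sketch below applies it to a deliberately tiny stand-in problem: five angle bins, a deterministic "fall away from upright" drift, and a handful of push actions. The toy dynamics, reward, and all names are assumptions for illustration and are far coarser than the discretization the application uses.

```python
def value_iteration(states, actions, transition, reward, gamma=0.95, tol=1e-6):
    """Bellman value iteration.

    transition(s, a) returns a list of (probability, next_state) pairs;
    reward(s, a) returns the immediate reward.
    Returns (values, policy) as dictionaries keyed by state.
    """
    def q_value(s, a, values):
        return reward(s, a) + gamma * sum(p * values[s2] for p, s2 in transition(s, a))

    values = {s: 0.0 for s in states}
    while True:
        delta = 0.0
        for s in states:
            best = max(q_value(s, a, values) for a in actions)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    # The greedy policy with respect to the converged values is the control law.
    policy = {s: max(actions, key=lambda a: q_value(s, a, values)) for s in states}
    return values, policy


# Hypothetical toy problem: state 0 is upright, +/-2 is the largest tilt bin.
STATES = [-2, -1, 0, 1, 2]
ACTIONS = [-2, -1, 0, 1, 2]


def toy_transition(s, a):
    drift = (s > 0) - (s < 0)             # the pendulum falls away from upright
    nxt = max(-2, min(2, s + drift + a))  # clamp to the discretized range
    return [(1.0, nxt)]


def toy_reward(s, a):
    return -abs(s)  # penalize distance from upright
```

Running `value_iteration(STATES, ACTIONS, toy_transition, toy_reward)` yields a policy that pushes against the tilt in every off-center bin and applies no push when upright.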

3D Animation Engine and Animated Short

Binary and source (C++ with OpenGL) (3.7 MB)

Full animation engine, built on top of skeleton UI-only code, as a final project for an introductory computer graphics class. I wrote all of the 3D rendering code, particle effect simulation, and spline interpolation code for animation curves. This code makes use of two different particle effects: burn marks from the rover's laser and Martian soil clumps. Intersection code determines where the burn marks appear based on the laser orientation. Physical effects are modeled for particles; the soil kicked up by the rover is subject to pressure drag, gravity and kinetic friction, as well as an initial velocity whose direction depends on the rover's current orientation.

The video above is an animated short that I created with this engine. Rover model geometry and textures are my own (but were not a priority for this project). Further details on texture and audio sources can be found in the YouTube description.

Panorama Image Stitcher

Windows Executable (6.2 MB) | Source (2.9 MB)

This is an application that takes two images as panorama input and stitches them together by computing a homography between the images and constructing an equirectangular image projection to align and stitch the images. Overlapping pixels are blended together. For this project I built a Harris corner detector as well as a RANSAC-based alignment algorithm.

This project was written in C++, using the Qt framework. Larry Zitnick at Microsoft Research wrote the majority of the UI code and the high-level architecture, and I wrote the majority of the actual stitching code.

Face Detector

Windows Executable (33.9 MB) | Source (31.3 MB)

This is an application that takes an input image and identifies human faces within the image. The application relies on a training dataset of faces and non-faces, and uses this data to compute Haar-like features as described by Viola and Jones in this paper. Next, the application uses AdaBoost to compute a classifier which is then applied to the test image to detect faces.

This project was written in C++, using the Qt framework. Larry Zitnick at Microsoft Research wrote the majority of the UI code and the high-level architecture, and I wrote the majority of the machine learning and detection code.

Small Projects

- Astrodynamical analysis of the efficiency of bielliptical transfers versus Hohmann transfers (1 | 2)

- 9x9 Sudoku solver, written in Python. (Download)

- Hangman game, written in Python. (Download)

- Text adventure set in the world of Fallout 3, written in Python. (Download)

I'm always open to learning new languages, libraries, and areas of programming. Learning new things is half the fun of programming!